Deep learning has long been the core technology driving artificial intelligence (AI). Even so, it is remarkable that a game company, KRAFTON, presented five papers in the main track of the world’s largest AI conference, the Conference on Neural Information Processing Systems (NeurIPS) 2023. This was the highest number of papers from any South Korean game company, which is all the more notable given that the acceptance rate at major top-tier AI conferences is a mere 26%. You can now learn about two of these papers in detail on the KRAFTON Blog. If you are interested in AI and deep learning, you won’t want to miss it!

Nice to meet you. Can you please introduce yourself?

Keon Lee: Hello, I’m Keon Lee from the KRAFTON AI Research Center. I conduct research on text-to-speech (TTS) technology. Recently, I took part in a research and development program for custom and multilingual TTS based on language models. Beyond TTS, I have a deep interest in generative AI and contributed to “Censored Sampling of Diffusion Models Using 3 Minutes of Human Feedback,” a paper presented at the recent NeurIPS.

Bumsoo Park: Hello, I’m Bumsoo Park from the KRAFTON AI Research Center. I follow recent developments in computer vision and graphics and explore ways to apply them to the game industry. For my master’s degree, I researched creating 3D animated characters through reinforcement learning. This experience proved valuable when I worked on “Discovering Hierarchical Achievements in Reinforcement Learning via Contrastive Learning,” a paper presented at this year’s NeurIPS.

Jaewoong Cho: Hi, I’m Jaewoong Cho. I lead the Core Research Team at the KRAFTON Deep Learning Division.

*Mr. Jaewoong Cho was on a business trip in the U.S., so his interview was conducted separately.

I heard KRAFTON presented five papers at NeurIPS 2023, the world’s largest AI conference. Could you tell us about the papers you worked on? What are the major findings and their implications, and how can they be applied to the game industry?

Keon Lee: I collaborated with Professor Ernest Ryu of Seoul National University and Professor Albert No of Hongik University on censoring techniques that use human feedback to prevent AI from generating undesirable images, published as “Censored Sampling of Diffusion Models Using 3 Minutes of Human Feedback.”

We live in an era where high-quality images can be created quickly and cheaply from text descriptions, and these images can also be used in game production. At the heart of this advancement are diffusion models, a class of generative models.

Early on, diffusion models would often generate images that did not match the input text, depending on the training data and learning method. I often heard from colleagues who were startled by the AI images they generated at work and glanced around in surprise. To address this, our paper developed an image censoring method that prevents undesirable images by using a small amount of human feedback (malign/benign labels) on images generated by the model, without incurring the high cost of retraining the already trained diffusion model. Diffusion models can produce high-quality images, and by preventing undesirable outputs, game producers using AI image generators can use them more broadly.
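To make the idea of “censoring without retraining” more concrete, here is a minimal toy sketch in the spirit of classifier guidance. It is not the paper’s implementation. Assumptions: a 1D two-mode Gaussian mixture stands in for a pretrained diffusion model (its score is known in closed form), Langevin sampling stands in for the diffusion sampler, a logistic regression fitted to about 20 labeled samples stands in for the reward model trained on human feedback, and all names (`score`, `train_reward_model`, `sample`, `guidance`) are hypothetical. The pretrained score is never modified; the small reward model only adds a gradient term that pushes samples toward the “benign” region.

```python
import math
import random

# Toy stand-in for a pretrained generative model: a 1D two-mode Gaussian mixture.
# Assumption: the mode at -2 plays the role of "benign" images, +2 of "malign" ones.
MEANS = (-2.0, 2.0)
SIGMA = 0.5


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def score(x):
    """Gradient of log p(x) for the equal-weight mixture (the model's 'score')."""
    logs = [-(x - m) ** 2 / (2 * SIGMA ** 2) for m in MEANS]
    top = max(logs)
    weights = [math.exp(lg - top) for lg in logs]  # shifted to avoid underflow
    return sum(w * (m - x) for w, m in zip(weights, MEANS)) / (sum(weights) * SIGMA ** 2)


def train_reward_model(xs, labels, lr=0.1, steps=500):
    """Fit p(benign | x) = sigmoid(a*x + b) to human labels (1 = benign,
    0 = malign) by gradient descent on binary cross-entropy."""
    a, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for x, y in zip(xs, labels):
            err = sigmoid(a * x + b) - y  # d(BCE)/d(logit)
            grad_a += err * x
            grad_b += err
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b


def sample(n, rng, reward=None, guidance=0.0, steps=200, eps=0.01):
    """Langevin sampling with the frozen score. If a reward model is given,
    guidance * d/dx log p(benign | x) is added to the drift."""
    out = []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        for _ in range(steps):
            drift = score(x)
            if reward is not None:
                a, b = reward
                # d/dx log sigmoid(a*x + b) = a * (1 - sigmoid(a*x + b))
                drift += guidance * a * (1.0 - sigmoid(a * x + b))
            x += eps * drift + math.sqrt(2 * eps) * rng.gauss(0.0, 1.0)
        out.append(x)
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical "human feedback": 20 generated samples labeled benign/malign.
    xs = [rng.choice(MEANS) + rng.gauss(0.0, SIGMA) for _ in range(20)]
    labels = [1 if x < 0 else 0 for x in xs]
    reward = train_reward_model(xs, labels)
    plain = sample(200, random.Random(1))
    censored = sample(200, random.Random(1), reward=reward, guidance=5.0)
    print("malign fraction without guidance:", sum(x > 0 for x in plain) / 200)
    print("malign fraction with guidance:   ", sum(x > 0 for x in censored) / 200)
```

The design choice this illustrates is that the expensive model stays frozen: only a tiny classifier is trained, so a handful of labels can change what the sampler produces. The guidance weight of 5.0 is an arbitrary illustrative value.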

Bumsoo Park: I worked with Professor Hyun Oh Song’s team at Seoul National University on hierarchical reinforcement learning in procedurally generated environments whose visuals vary.

In reinforcement learning for game agents, the system acts as an agent in the game and learns to play by exploring the game environment. The resulting agent can be used for game testing or to create new gaming experiences for actual users. However, agents still struggle to learn when game visuals change continuously or when they must solve a series of complex tasks. In this research, we addressed this problem by applying contrastive learning. Ultimately, we developed an algorithm that works effectively even as game visuals change constantly and that enables the agent to understand and learn to solve a series of complex tasks. This algorithm performs approximately 50% better than DreamerV3, an algorithm recently showcased by DeepMind.

Through this research, we discovered that combining policy-gradient-based reinforcement learning with contrastive learning yields a more effective method for discovering hierarchical achievements. This new approach performs better in visually diverse environments while using fewer model parameters than the previous method.
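For readers unfamiliar with contrastive learning, the sketch below shows a generic InfoNCE loss, one common contrastive objective, written in plain Python. It is an illustration of the general technique, not the paper’s specific objective. Assumptions: each “anchor” is a hypothetical embedding of a game state, each “positive” is a hypothetical embedding of the achievement paired with it, the other items in the batch act as negatives, and `combined_loss` with its `coef` weight is a hypothetical way of adding the contrastive term to a policy-gradient loss.

```python
import math


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def info_nce(anchors, positives, temperature=0.1):
    """Mean InfoNCE loss over a batch: anchors[i] should be most similar to
    positives[i]; every positives[j] with j != i serves as a negative."""
    anchors = [normalize(a) for a in anchors]
    positives = [normalize(p) for p in positives]
    total = 0.0
    for i, a in enumerate(anchors):
        # Cosine similarities scaled by the temperature act as logits.
        logits = [dot(a, p) / temperature for p in positives]
        top = max(logits)
        log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
        total += log_sum_exp - logits[i]  # equals -log softmax(logits)[i]
    return total / len(anchors)


def combined_loss(policy_loss, contrastive_loss, coef=0.1):
    """Hypothetical weighting of a policy-gradient loss with the contrastive term."""
    return policy_loss + coef * contrastive_loss


if __name__ == "__main__":
    states = [[1.0, 0.0], [0.0, 1.0]]   # hypothetical state embeddings
    matched = [[1.0, 0.1], [0.1, 1.0]]  # embeddings of the paired achievements
    swapped = [[0.1, 1.0], [1.0, 0.1]]  # same embeddings, wrong pairing
    print(info_nce(states, matched), info_nce(states, swapped))
```

The loss is small when each state embedding lines up with its own achievement and large when the pairing is wrong, which is what pushes the representation to encode achievement-relevant structure rather than surface visuals.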

This will facilitate the development of reinforcement learning agents capable of operating in large open-world games.

What was the greatest challenge during the research?

Keon Lee: It was an industry-academia cooperation project, so there were no issues directly related to planning or developing ideas. If I had to point one out, we devoted significant energy to communication when experiments were run separately and results had to be collected, because of our different work styles and background knowledge. With meetings and progress sharing conducted online, staying in sync took considerable time and effort. Even so, we were a great team, and because we all did our best, the project yielded good results.

Bumsoo Park: Choosing the topic was the most difficult part. I spent four months exploring various topics, searching for something promising. However, most were either impractical or too simple for research.

During this period, I also looked into Minecraft. However, I found that papers on Minecraft consistently reported high GPU usage and long training times. As a result, I decided to shift my focus to a simpler game and continue my studies.

Since you brought it up, how are paper topics decided, and how is research conducted? Working for a private enterprise, you may not always have the opportunity to conduct the research you want.

Jaewoong Cho: We begin by identifying the technology we need to develop to reach our long-term goals and the challenges that must be overcome. We then determine the required number of researchers and bring together those who are interested to discuss ideas and develop solutions. As long as the research topic aligns with our long-term goals, researchers have considerable freedom. Even if you don’t take a leading role, you are free to participate in the project of your choice.

Thank you for elaborating. This is a more general question. Why does KRAFTON present research on deep learning at conferences?

Jaewoong Cho: Through peer review at prominent conferences, KRAFTON’s technology can receive validation from deep learning researchers internationally. This also enables us to stay on top of recent trends in deep learning research and adjust our research topics accordingly. These activities will strengthen KRAFTON’s next-generation technology, showcase our capabilities to the world, and create opportunities to attract talented individuals to KRAFTON.

Where does KRAFTON go from here with AI research?

Jaewoong Cho: Recent developments in generative AI have made it increasingly common in our everyday lives. Many enterprises are trying to adopt AI technology in their businesses, and they can be divided into two groups. The first group focuses on directly training foundation models on large datasets. The second group prefers to build systems for specific tasks on top of pre-trained foundation models rather than training them from scratch. KRAFTON belongs to the latter group. Our goal is to use foundation models to build systems for game production and to create new user experiences with AI technology. This includes using image-generating AI with small datasets to produce images in desired styles, as well as developing game agents. These agents are designed to understand and play games while communicating in natural language, which we achieve by integrating large language models (LLMs) with reinforcement learning. KRAFTON is also studying how effective foundation models are, identifying and addressing potential issues that may arise when assembling and deploying such systems.

What are some major milestones that KRAFTON has reached up to this point?

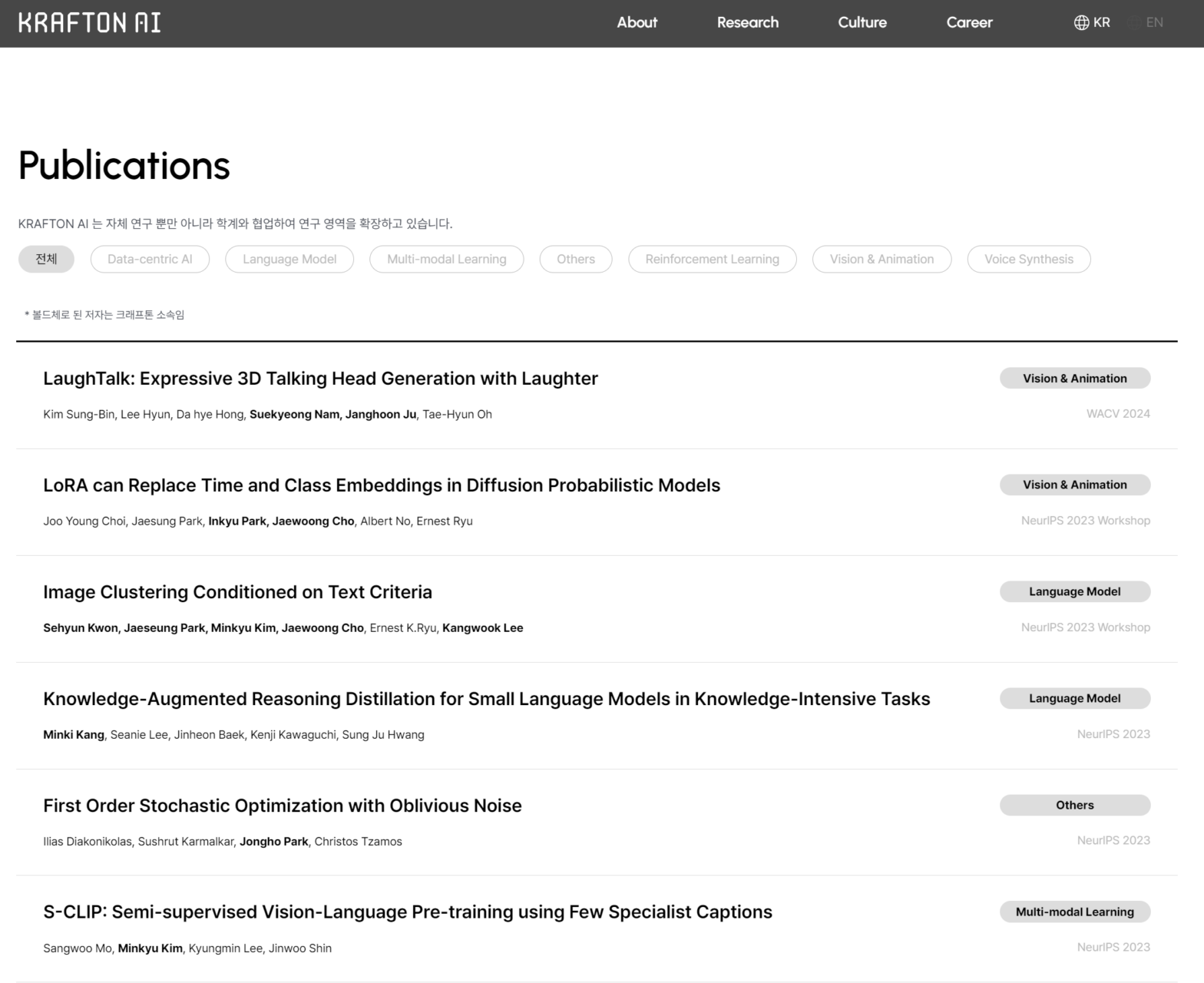

Jaewoong Cho: In addition to the advances Keon Lee and Bumsoo Park discussed, such as preventing inappropriate image generation with diffusion models and enhancing hierarchical reinforcement learning, we’ve also made strides in other areas. These include chatbots powered by LLMs and memory, LLM acceleration, and custom/multilingual TTS using language models, among others. This year alone, KRAFTON has presented ten main track papers and six workshop papers at international AI conferences such as NeurIPS (a top-tier machine learning conference), ACL (a computational linguistics conference), and COLT (a learning theory conference). Detailed information on our research can be found on the Deep Learning Division homepage (https://www.krafton.ai/ko/research/publications/).

What role does KRAFTON’s Deep Learning Division expect AI technology to play in the company’s future?

Jaewoong Cho: We see it playing two roles. First, AI technology will bring “innovative efficiency to game production” and enable bold new experiments. Second, the development of the “Virtual Friend” will create a new and enjoyable experience for users.

When conducting AI research, is there a philosophy or principle that you think is important?

Keon Lee: I started researching AI because I was fascinated by its amazing results, and I enjoyed the process of verifying hypotheses. However, as AI research, especially in generative AI, increasingly influences the daily lives of the general public, I believe it is crucial to regularly reflect on my research and its impact on the research community, our society, and humanity, and to ask whether it will ultimately contribute to happiness.

Bumsoo Park: During the research process, I try to treat the AI as if it were a person. In AI research, we sometimes assign tasks that the model cannot perform. Thinking of the AI as a person helps me stay aware of this and make sure I am not asking for something impossible. Likewise, when analyzing phenomena and results, I try to understand them as I would the thoughts of a person.

This is the last question. In your own words, what is deep learning?

Keon Lee: I believe it is “the best method for humanity to explore itself inductively.” Humanity constantly strives to understand itself, and when we unlock the secrets of our brains, I believe a new world will open. Until now, however, deciphering the brain deductively has been difficult. In my view, deep learning can fulfill this aspiration. It’s as if deep learning is playing a game, mimicking humans to acquire the ability to perform tasks better than humans. Winning this game is simple: thoroughly observe and analyze ourselves, then amplify those insights with immense computing power.

Bumsoo Park: I consider deep learning to be the “key that opens all doors.” I remember when deep learning first emerged and many university labs shifted their focus to machine learning. As time passes, I believe more and more fields will research and adopt deep learning. I think it is a versatile tool because it also holds promise outside engineering, in areas such as art and literature.